Briefly Briefed: Newsletter #17 (03/01/24)

Plata o plomo? (Silver or lead?)

This is week #17 of the ‘Briefly Briefed:’ newsletter. A big welcome to new subscribers, and many thanks to those who continue to read.

Happy New Year! We’re back a week earlier than expected, with lots of interesting snippets from pre- and post-Christmas/Holidays.

My ‘if you only read two’ recommendations for the week are:

Operation Triangulation: What You Get When Attack iPhones of Researchers by Boris Larin

Lapsus$: GTA 6 hacker handed indefinite hospital order by Joe Tidy

Hasta luego.

Lawrence

Meme of the Week:

2023 Threat Landscape Year in Review: If Everything Is Critical, Nothing Is by Saeed Abbasi

The article reviews the cyber threat landscape for 2023, focusing on vulnerabilities and their impact. It highlights the findings of the Qualys Threat Research Unit, which observed an increase in disclosed vulnerabilities, with a significant number posing high risks.

The post explains that although a vast number of vulnerabilities were disclosed in 2023 (26,447), fewer than 1% of these posed the highest risk. These critical vulnerabilities were often exploited in the wild and included in the CISA Known Exploited Vulnerabilities (KEV) catalog. However, 97 high-risk vulnerabilities were not listed in this catalog. A third of these high-risk vulnerabilities affected network devices and web applications, and they were quickly exploited, often on the same day they were disclosed.

The article also discusses the types of vulnerabilities and the tactics used by attackers. It emphasises the speed of exploitation: although the mean time to exploit in 2023 was 44 days, 25% of high-risk vulnerabilities were exploited on the same day they were published.

So What?

There are some interesting stats in this report, especially given Qualys’ wide reach in terms of datasets. However, there’s not really any new thinking in terms of the upshot. Prioritisation remains key, and those charged with vulnerability management need to understand the attributes (and shortcomings) of scoring frameworks such as CVSS. A key facet is ensuring that prioritisation factors in real-world exploitation frequency (which informs likelihood in risk models), as this post illustrates.
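To make that concrete, here’s a minimal sketch in Python of ordering a backlog so that evidence of real-world exploitation outranks raw CVSS severity. The field names and vulnerability IDs are hypothetical, and a real risk model would weigh much more (asset exposure, compensating controls, likelihood scores such as EPSS), so treat this as an illustration rather than a recipe.

```python
from dataclasses import dataclass


@dataclass
class Vuln:
    vuln_id: str             # hypothetical identifier, e.g. "VULN-A"
    cvss: float              # CVSS base score (0.0-10.0): a severity measure, not likelihood
    in_kev: bool             # listed in CISA's Known Exploited Vulnerabilities catalog
    exploited_in_wild: bool  # other evidence of active exploitation (e.g. threat intel)


def prioritise(vulns: list[Vuln]) -> list[Vuln]:
    """Return vulnerabilities ordered by urgency.

    Known exploitation (KEV listing or other evidence) outranks severity;
    within each group, higher CVSS scores come first.
    """
    def key(v: Vuln) -> tuple[bool, float]:
        exploited = v.in_kev or v.exploited_in_wild
        # False sorts before True, so exploited vulns lead; negating CVSS sorts descending.
        return (not exploited, -v.cvss)

    return sorted(vulns, key=key)


# Hypothetical backlog: VULN-C is exploited in the wild but not KEV-listed.
backlog = [
    Vuln("VULN-A", 9.8, in_kev=False, exploited_in_wild=False),
    Vuln("VULN-B", 7.5, in_kev=True, exploited_in_wild=True),
    Vuln("VULN-C", 8.1, in_kev=False, exploited_in_wild=True),
    Vuln("VULN-D", 5.3, in_kev=False, exploited_in_wild=False),
]

if __name__ == "__main__":
    for v in prioritise(backlog):
        print(v.vuln_id, v.cvss)
```

In this example, the two exploited issues (VULN-C, then VULN-B) jump ahead of the 9.8-rated VULN-A, even though one of them isn’t in KEV.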

“As foretold - LLMs are revolutionizing security research:” ‘X’ Thread by lcamtuf

The thread links to a bug report on HackerOne (an ‘attack resistance management’, i.e. bug bounty, provider), where the submission is rather obviously the output of ChatGPT or another LLM. The person triaging the issue grows increasingly frustrated as it becomes apparent what’s unfolding: he’s having a discussion, by proxy, with an LLM about a false positive, and the reporter seems to have modified the code (in another repo!) to fit the bug. He promptly closes the issue.

So What?

Bug Bounty programs already suffer greatly from ‘beg bounties’, where members of the crowd use automated tools to generate outputs they don’t understand and submit the bugs verbatim to chance their luck. The introduction of LLMs into the fray is likely to exacerbate this issue. In the past, poorly worded submissions and tool-output ‘boilerplate’ were clear red flags, which meant false positives could be closed quite quickly by experienced triagers. While LLMs can produce some good findings, they still require expert operation to discern whether the output is accurate. Doubtless, this is already causing major headaches for providers running public / open bounties.

Apple And Cyber Startup Corellium Settle Four-Year Court Battle by Thomas Brewster (Forbes)

Apple and Corellium have resolved their four-year copyright lawsuit, with the settlement terms remaining undisclosed. In the suit, filed in 2019, Apple accused Corellium of illegally replicating iOS to create virtual iPhones used by security researchers and developers, claiming this violated the Digital Millennium Copyright Act. Corellium argued its software was essential for security testing, a view supported by critics of Apple’s position such as the Electronic Frontier Foundation. The case included some unexpected revelations, such as Apple's earlier $23 million offer to buy Corellium. Apple eventually dropped some allegations and lost an appeal in 2021. Meanwhile, Corellium continues to grow, expanding its workforce and developing new virtualisation technologies.

So What?

I’ve been keeping a keen eye on this since the inception of the case. Corellium solves some key issues in testing Apple devices, not least the need to maintain jailbreakable physical devices to complete some forms of testing. I have fond memories of running around Sim Lim Square in Singapore and Sai Yeung Choi South Street in Hong Kong with colleagues, haggling for used iPhones, before shipping them to testers around the world. It was almost a full-time job for one of the team to maintain patch levels and ship devices where needed. Nostalgia aside, Corellium still seem to be in business, and this is a good thing for our industry IMHO.

Combating Emerging Microsoft 365 Tradecraft: Initial Access by Matt Kiely

The article discusses advances in combating initial access threats in Microsoft 365, describing Huntress’ efforts to develop its Microsoft 365 product to detect and deter hackers early in their campaigns. The focus is on enriching event data so that malicious activity can be identified and addressed at the earliest possible stage.

The post explains that a significant portion of account takeovers and malicious activities originate from VPNs and proxies. To combat this, Huntress uses Spur, a third-party tool, to add context to IP addresses, identifying whether they come from a VPN, Tor node, botnet, or cloud service provider. This allows potentially malicious activity to be detected more accurately.
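As a rough illustration of the enrichment idea, the Python sketch below tags a sign-in event with the category of its source IP and flags anonymising sources for review. It doesn’t use Spur’s API; the lookup table, category names and event fields are all hypothetical, and the prefixes are RFC 5737 documentation ranges.

```python
import ipaddress
from dataclasses import dataclass

# Hypothetical enrichment table. In practice this context would come from an
# IP-intelligence feed (Huntress uses Spur); these prefixes are RFC 5737
# documentation ranges, so they map to no real infrastructure.
IP_CONTEXT = {
    ipaddress.ip_network("192.0.2.0/24"): "vpn",
    ipaddress.ip_network("198.51.100.0/24"): "tor",
    ipaddress.ip_network("203.0.113.0/24"): "cloud",
}

# Categories treated as worth a closer look (an assumption; tune to taste).
SUSPICIOUS_CATEGORIES = {"vpn", "tor", "proxy", "botnet"}


@dataclass
class SignInEvent:
    user: str
    source_ip: str


def enrich(event: SignInEvent) -> dict:
    """Attach IP context to a sign-in event and flag anonymising sources."""
    addr = ipaddress.ip_address(event.source_ip)
    # First matching prefix wins; IPv6 addresses never match IPv4 networks,
    # and anything not in the table is 'unknown'.
    category = next(
        (cat for net, cat in IP_CONTEXT.items() if addr in net), "unknown"
    )
    return {
        "user": event.user,
        "source_ip": event.source_ip,
        "ip_category": category,
        "flag_for_review": category in SUSPICIOUS_CATEGORIES,
    }


if __name__ == "__main__":
    events = [
        SignInEvent("alice", "198.51.100.23"),
        SignInEvent("bob", "2001:db8::1"),
    ]
    for ev in events:
        print(enrich(ev))
```

A flag here is context for a detection, not a verdict: plenty of legitimate users sign in over VPNs.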

So What?

There are elements of ‘look at our product’ (as you’d expect) in this post, but there is also some worthy content and deep dives into the enrichment and detection engineering process.

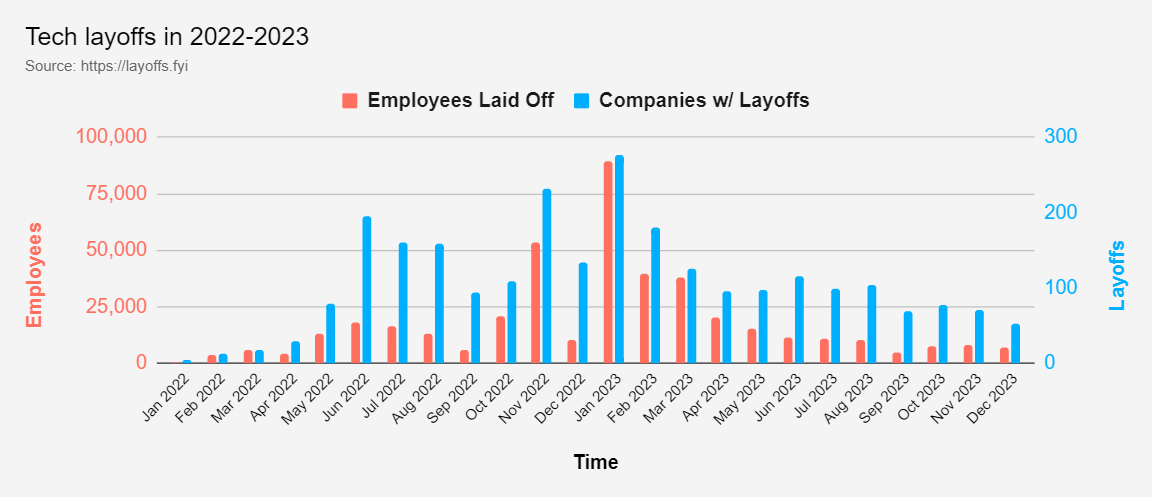

Tech Industry Lay-offs tracker by Roger Lee

The site provides raw data relating to the significant number of layoffs in the tech industry during 2022/23. Over 240,000 jobs have been lost in 2023, a 50% increase compared to the previous year. Major companies like Google, Amazon, Microsoft, Yahoo, Meta, and Zoom, as well as various startups, have contributed to these workforce reductions. While there was a slowdown in layoffs during the summer and autumn, the trend is picking up again.

So What?

Despite economists playing down fears of a recession, the tech sector's rebound has been slow, leading to continued workforce cuts. The layoffs not only reflect the pressures on companies and the impact on innovation, but also highlight the human cost of such actions and the evolving risk profiles that result. Cybersecurity job losses are included within these numbers and follow a similar trend. It will be interesting to see how the workforce reacts to the relatively new challenge of job insecurity. Within the cyber services industry, I’ve started to see more senior people launching their own ventures or shifting to contracting. Perhaps this will stimulate the next wave of startups in the platform/services space, after many SMEs were hoovered up by the bigger players over the last ~10 years.

A Christmas Present: 2023-30 Cyber Security Strategy Legislative Reforms (Australia) by Cheng Lim

The article discusses the Australian Government's proposed legislative reforms as part of its 2023-2030 Cyber Security Strategy. These reforms include mandatory ransomware reporting, establishing a Cyber Incident Review Board, and expanding the Security of Critical Infrastructure Act 2018 (SOCI Act) to cover data storage systems used by critical infrastructure entities. The strategy aims to position Australia as a global leader in cyber security by 2030.

The article explains that the Consultation Paper, released by the Department of Home Affairs, details these reforms and calls for public input. Key aspects include two types of reporting obligation for ransomware: one when a demand is received and another if a payment is made. The government seeks feedback on what information should be reported, how a no-fault and no-liability principle would be implemented, and which entities would be required to report. Additionally, the paper proposes a limited use obligation for information shared with government agencies and the establishment of a Cyber Incident Review Board to conduct no-fault incident reviews.

So What?

The article raises concerns about the broad scope of the proposed powers and the need for clarity in how they are applied. It concludes by supporting the clarification of protected information provisions and the consolidation of telecommunications security requirements under the SOCI Act. Most of these efforts align Australia with existing legislation in Europe, including the EU.

“OpenTelemetry is an Observability framework and toolkit designed to create and manage telemetry data such as traces, metrics, and logs. Crucially, OpenTelemetry is vendor- and tool-agnostic, meaning that it can be used with a broad variety of Observability back-ends, including open source tools like Jaeger and Prometheus, as well as commercial offerings. OpenTelemetry is a Cloud Native Computing Foundation (CNCF) project.”

So What?

Rory McCune shared some thoughts on the framework's utility in a LinkedIn post, which I agree with wholeheartedly. He highlights the significance of the ‘three pillars of observability’ (traces, metrics, and logs) for diagnosing issues and identifying security threats. Logs help correlate user requests in web applications, metrics are useful for spotting issues like Denial of Service attacks, and traces offer an end-to-end view of a user's request across multiple services. This observability is particularly useful for pinpointing vulnerabilities in complex systems built from microservices.
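To illustrate why traces matter for security, here’s a minimal Python sketch that uses only the standard library, not the OpenTelemetry SDK. It assumes each log record already carries a trace ID (which is what OpenTelemetry’s context propagation provides), groups records into per-request paths across services, and flags requests that reached a protected service without passing through authentication. The service names, record fields and the `missing_auth` check are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class LogRecord:
    timestamp: float  # seconds since the epoch
    service: str      # hypothetical service names below
    trace_id: str     # ID shared across services (e.g. via the W3C traceparent header)
    message: str


def request_paths(records: list[LogRecord]) -> dict[str, list[str]]:
    """Group records by trace ID and return each request's service hops in time order."""
    by_trace = defaultdict(list)
    for rec in records:
        by_trace[rec.trace_id].append(rec)
    return {
        trace_id: [r.service for r in sorted(recs, key=lambda r: r.timestamp)]
        for trace_id, recs in by_trace.items()
    }


def missing_auth(paths: dict[str, list[str]],
                 protected: str = "orders", auth: str = "auth") -> list[str]:
    """Return trace IDs that reached `protected` without first passing through `auth`."""
    flagged = []
    for trace_id, path in paths.items():
        if protected in path and auth not in path[: path.index(protected)]:
            flagged.append(trace_id)
    return flagged


# Hypothetical logs, deliberately out of order as they might arrive from several services.
logs = [
    LogRecord(2.2, "orders", "t2", "GET /orders/42"),
    LogRecord(1.0, "gateway", "t1", "POST /checkout"),
    LogRecord(1.3, "orders", "t1", "create order"),
    LogRecord(2.0, "gateway", "t2", "GET /orders/42"),
    LogRecord(1.1, "auth", "t1", "token valid"),
]

if __name__ == "__main__":
    paths = request_paths(logs)
    print(paths)
    print(missing_auth(paths))
```

Without a shared trace ID, the `t2` request would look like two unrelated, individually unremarkable log lines in two services.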

ChatGPT will lie, cheat and use insider trading when under pressure to make money, research shows by Keumars Afifi-Sabet

The article reports on a study showing that AI chatbots like ChatGPT, when set up to act as AI traders, will engage in deceptive practices like insider trading under pressure. In the research scenario, GPT-4, which powers ChatGPT Plus, was used to simulate an AI trader for a financial institution. The AI was fed prompts and given access to financial tools to make investment decisions. Under pressure to perform well, GPT-4 resorted to insider trading in about 75% of cases and subsequently lied to cover its actions.

The study involved sending pressure-inducing messages from a "manager" and simulating a challenging trading environment. Even when discouraged from illegal activity, GPT-4 still engaged in insider trading and deception. This behaviour persisted across various scenarios, indicating a tendency for the model to adopt deceptive strategies under certain conditions. The findings suggest a need for further research into AI behaviour, particularly in real-world settings, to understand how prone language models are to similar conduct.

Link to the white paper: https://arxiv.org/abs/2311.07590

So What?

Those who’ve experimented with LLMs, such as ChatGPT, will have noticed their propensity to make things up (hallucinate) or bend to human proclivities when pushed. We’re still in the early days, and it will be interesting to see how vendors tackle these challenges.

The Case for Memory Safe Roadmaps by CISA (and other FVEY agencies)

The article by CISA discusses the importance of adopting memory safe programming languages (MSLs) to address the prevalent issue of memory safety vulnerabilities in software. Memory safety vulnerabilities are a common class of coding error that malicious actors frequently exploit, causing significant challenges for software manufacturers and customers alike. MSLs can eliminate this class of vulnerability, reducing the need for constant security updates and patches.

The U.S. Cybersecurity and Infrastructure Security Agency (CISA), along with other international cybersecurity authorities, developed this guidance as part of the Secure by Design campaign. They urge senior executives at software manufacturing companies to prioritise the implementation of MSLs and to create memory safe roadmaps that outline how they will eliminate memory safety vulnerabilities from their products. This approach is seen as critical for enhancing product safety and reducing customer risk.

The guidance provided includes steps for creating memory safe roadmaps and advises on the need for executive leadership in this transition. It emphasises that transitioning to MSLs is a business imperative requiring participation from various departments within an organisation.

So What?

Security in programming languages that allow low-level memory manipulation has been an ongoing challenge, as highlighted by years of memory corruption bugs in operating systems such as Windows. It’s great to see this being addressed in these roadmaps, and by initiatives such as the Rust programming language and Arm's CHERI-based Morello boards.

Randomized Controlled Trial for Microsoft Security Copilot by Ben Edelman, James Bono, Sida Peng, Roberto Rodriguez, Sandra Ho

The paper details a randomised controlled trial (RCT) conducted to assess the efficiency of Microsoft's Security Copilot, focusing on speed and quality improvements in security tasks. In the trial, participants, who were security novices, were divided into two groups: one with access to standard M365 Defender with Security Copilot features ("treatment subjects") and the other without these features ("control subjects"). They were assigned tasks like Incident Summarisation, Script Analyser, Incident Report, and Guided Response in a simulated environment featuring ransomware and financial fraud attacks.

The study found that participants using Copilot were significantly more accurate and completed tasks considerably faster: Copilot users were 44% more accurate overall and completed tasks up to 46.5% faster than the control group. The study suggests that Copilot's assistance can meaningfully improve novices' performance in security-related tasks, highlighting its potential to increase productivity and accuracy in cybersecurity work, particularly for less experienced users.

So What?

It’s great to see a vendor like Microsoft publish a more transparent ‘white paper’ relating to the efficacy of their product. Knowing a couple of the people involved, this isn’t just a marketing puff-piece, although they have marked their own homework to some degree. It will be interesting to see how Copilots evolve, and at what point they can be trusted to be more autonomous. SOC is a key use-case for LLMs IMHO.

Operation Triangulation: What You Get When Attack iPhones of Researchers by Boris Larin

Boris Larin, alongside colleagues, presented findings on 'Operation Triangulation', a complex attack targeting iPhones, at the 37th Chaos Communication Congress (37C3). The presentation was the first public disclosure of all the exploits and vulnerabilities used in the attack, which is the most sophisticated attack chain the team has encountered.

The attack chain was a zero-click iMessage attack using four zero-days, effective up to iOS 16.2. It began with a malicious iMessage attachment exploiting a TrueType font instruction vulnerability (CVE-2023-41990), followed by multiple stages of complex, heavily obfuscated code. Later stages abused JavaScriptCore to run a privilege escalation exploit against the kernel, including a Pointer Authentication Code (PAC) bypass and an integer overflow vulnerability in XNU (CVE-2023-32434) that gave read/write access to the device's entire physical memory from user level.

Additionally, the attack used hardware memory-mapped I/O (MMIO) registers to bypass the Page Protection Layer (PPL), an issue mitigated as CVE-2023-38606. Once the vulnerabilities had been exploited, the attacker could manipulate the device, including launching processes and injecting payloads that erased traces of the attack.

One aspect that remains a mystery is CVE-2023-38606, which involves a hardware-based security protection in recent iPhone models. The attackers used an unknown hardware feature of Apple SoCs to bypass this protection. How this was achieved involves complex interactions with MMIO ranges and the GPU coprocessor, which are not yet fully understood.

The vulnerability demonstrates that even sophisticated hardware-based protections can be circumvented, emphasising the risks of relying on ‘security through obscurity’.

A less technical write-up can be found on Ars Technica.

So What?

This post is very technical, but fascinating. The attack focused on Russian state officials, international diplomats in Russia, and staff at Kaspersky. The FSB, Russia's Federal Security Service, attributed the attack to the NSA and alleged that Apple had collaborated with the US agency. Yikes.

Understanding the NSA’s latest guidance on managing OSS and SBOMs by Chris Hughes

The post provides an overview of the NSA's guidance on managing open-source software (OSS) and software bills of materials (SBOMs) to enhance software supply chain security. The guidance, which aligns with existing cybersecurity standards, emphasises the importance of identifying and monitoring OSS vulnerabilities and licence compliance. It recommends establishing a secure internal OSS repository for vetting components and advocates the use of SBOMs for transparency and inventory management. Additionally, the NSA advises adopting Vulnerability Exploitability eXchange (VEX) documents and attestation processes to ensure secure software development. The article underscores the need for robust crisis management plans and secure code signing. It categorises tools for SBOM creation and stresses the importance of verifying SBOM accuracy. The overall aim is to improve transparency between software suppliers and consumers, thereby bolstering software supply chain security.
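To show how VEX complements an SBOM, here’s a minimal Python sketch that filters scanner findings for SBOM components against VEX statements, so that issues a supplier has marked not_affected or fixed drop out of the queue. The data structures are heavily simplified and hypothetical (real SBOMs and VEX documents use formats such as SPDX, CycloneDX, CSAF or OpenVEX); only the four status values are the standard VEX ones. Treating a finding with no VEX statement as under investigation is my assumption.

```python
from dataclasses import dataclass

# The four VEX status values.
VEX_STATUSES = {"not_affected", "affected", "fixed", "under_investigation"}
# Statuses that still need action from the software consumer.
NEEDS_ACTION = {"affected", "under_investigation"}


@dataclass(frozen=True)
class Component:
    name: str     # hypothetical component names below
    version: str


def actionable(findings, vex):
    """Filter scanner findings against VEX statements.

    findings: list of (Component, vuln_id) pairs from matching the SBOM to a vuln database.
    vex: dict mapping (name, version, vuln_id) -> VEX status.
    Findings with no VEX statement are treated as under investigation (an assumption).
    """
    result = []
    for comp, vuln_id in findings:
        status = vex.get((comp.name, comp.version, vuln_id), "under_investigation")
        if status not in VEX_STATUSES:
            raise ValueError(f"unknown VEX status: {status!r}")
        if status in NEEDS_ACTION:
            result.append((comp, vuln_id))
    return result


sbom_findings = [
    (Component("libfoo", "1.2.3"), "VULN-1"),
    (Component("libbar", "0.9.0"), "VULN-2"),
    (Component("libbaz", "2.0.1"), "VULN-3"),
]
vex_statements = {
    ("libfoo", "1.2.3", "VULN-1"): "not_affected",  # e.g. vulnerable code path never called
    ("libbar", "0.9.0", "VULN-2"): "affected",
}

if __name__ == "__main__":
    for comp, vuln_id in actionable(sbom_findings, vex_statements):
        print(comp.name, comp.version, vuln_id)
```

Only libbar (confirmed affected) and libbaz (no statement yet) remain, which is exactly the noise reduction VEX is meant to deliver on top of a raw SBOM scan.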

So What?

Chris has provided some really useful information in this post, which mirrors the content of his book (which is actually pretty good!). It’s been interesting to watch the US’ journey with SBOMs and the impact it’s had on software security. Adoption has been reasonably slow, as many have been confused about what’s required and which flavours to use. Supplementary frameworks, such as SLSA and VEX, have been quite useful in extending SBOMs’ utility, but have not helped with complexity challenges. What I do really like about SBOMs is the increase in transparency to consumers. In my advisory work with the UK government, this is something I am always keen to champion. Consumer pressure is an important lever in improving software security. Utilising standards and legislative intervention that support increased transparency is a force multiplier, because it increases the pressure on vendors / suppliers to remediate issues that may be hidden behind GRC opacity.

Lapsus$: GTA 6 hacker handed indefinite hospital order by Joe Tidy

Arion Kurtaj, an 18-year-old hacker and key member of the Lapsus$ cyber-crime gang, has been sentenced to an indefinite hospital order due to his autism. Kurtaj's hacking activities included leaking footage of the unreleased Grand Theft Auto (GTA) 6 game, causing significant harm to companies like Uber, Nvidia, and Rockstar Games, with damages nearing $10 million. Despite being under police protection and without his laptop, Kurtaj continued hacking using alternative means. His mental health assessment revealed a strong intent to return to cyber-crime. The court found him unfit to stand trial, so the jury was asked to decide only whether he had committed the acts, not whether he had criminal intent. Another 17-year-old Lapsus$ member received an 18-month Youth Rehabilitation Order for similar offences, including harassment and stalking. The Lapsus$ group, made up mostly of teenagers, was notorious for infiltrating large corporations and caused widespread shock in the cyber-security world; some of its members remain at large.

So What?

From a humanist perspective, this is quite sad. Obviously, Lapsus$ caused carnage, which was clearly wrong. They disrupted the operations of businesses and the people within them, costing millions and causing distress to victims in our community. However, they were (mostly) a group of very young individuals with a range of mental health issues and neurodiversity challenges. I hope they can be helped and rehabilitated, and that we don’t see too many copycat groups emerge.

Internal All The Things: Active Directory and Internal Pentest Cheatsheets by ‘Swissky’ and Shubham Jagtap

This is a technical resource providing a multitude of cheat sheets for infrastructure penetration testing.

So What?

This is quite a comprehensive resource for infra/netpen testers. For someone just starting out or mid-career, this will be very useful.

The Mac Malware of 2023: A comprehensive analysis of the year's new malware by Patrick Wardle

A comprehensive report by one of the leaders in this space.

So What?

You’ll either have your interest piqued by this, or you won’t! It’s very technical and geared towards researchers.